Optical Network Expert Series: Applying AI to maximize capacity and QoT in optical networks

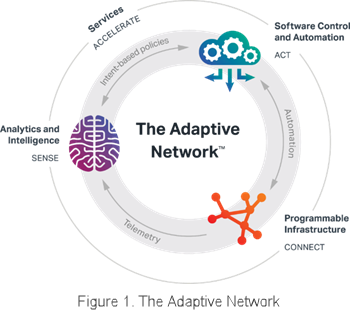

Artificial Intelligence (AI) is such a familiar concept these days that it has become something of a buzzword. When we apply AI to an optical network, the goal is to algorithmically determine the best network actions and their associated rewards. In our specific use case of delivering a near-zero margin network, the reward is increased network capacity while maintaining the desired quality of transmission (QoT). When applied in the context of a closed-loop SDN-controlled system, such as the Adaptive Network™, AI can be used to enable a more autonomous network. The result is a software application that can learn when and how to act on the network equipment that makes up the programmable optical infrastructure in order to reach and maintain an optimal operating state.


Using AI and ML in optical networks

Today there is ongoing research into various machine learning (ML) techniques for network automation, including Reinforcement Learning (RL). RL has received a lot of attention for its success in solving complex goal-seeking problems, such as DeepMind's well-known AlphaGo program. Although various RL techniques are in use today, the following example focuses on Q-learning applied to network optimization.

Q-learning is an iterative RL algorithm used to create and apply intelligence in a closed-loop system. It uses telemetry data to determine an operational “state” of interest in the network and then estimates the cumulative “reward” (the Q-value) that can be expected from each action that influences that state. The algorithm then decides which action to take on the network to bring it to the next level of performance, choosing the one expected to maximize the cumulative reward.
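The state/action/reward loop described above can be sketched as a minimal tabular Q-learning agent. This is an illustrative sketch, not Ciena's implementation: the three-action set (`rate_down`, `hold`, `rate_up`), the state labels, and the class name `QLearningAgent` are all hypothetical, and the hyperparameter values are arbitrary assumptions. The core is the standard Q-learning update, which nudges Q(s, a) toward the observed reward plus the discounted best Q-value of the next state.

```python
import random
from collections import defaultdict

# Hypothetical discrete action set: step a channel's line rate down, hold it, or step it up.
ACTIONS = ("rate_down", "hold", "rate_up")


class QLearningAgent:
    """Minimal tabular Q-learning agent (a sketch, not a production controller)."""

    def __init__(self, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
        self.alpha = alpha            # learning rate
        self.gamma = gamma            # discount applied to future rewards
        self.epsilon = epsilon        # probability of exploring a random action
        self.q = defaultdict(float)   # (state, action) -> estimated cumulative reward
        self.rng = random.Random(seed)

    def best_action(self, state):
        # Greedy choice: the action with the highest current Q-value in this state.
        return max(ACTIONS, key=lambda a: self.q[(state, a)])

    def choose_action(self, state):
        # Epsilon-greedy: usually exploit what has been learned, occasionally explore.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(ACTIONS)
        return self.best_action(state)

    def update(self, state, action, reward, next_state):
        # Q(s,a) <- Q(s,a) + alpha * (r + gamma * max_a' Q(s',a') - Q(s,a))
        best_next = max(self.q[(next_state, a)] for a in ACTIONS)
        td_target = reward + self.gamma * best_next
        self.q[(state, action)] += self.alpha * (td_target - self.q[(state, action)])


agent = QLearningAgent()
agent.update("high_margin", "rate_up", reward=1.0, next_state="medium_margin")
print(agent.q[("high_margin", "rate_up")])  # 0.1
```

In a closed loop, the state would be derived from telemetry and the reward from the measured outcome of each action; the table is kept tiny here only to make the update rule visible.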

As with all machine learning, Q-learning requires training. In this use case, it learns and improves through iterations to accurately determine how specific actions, applied in the network, will yield specific performance outcomes. For example, in a greenfield deployment, the operator could let the network operate with control traffic (without real customer traffic) so that the RL agent can learn by trial and error. If this is not possible, the agent can learn from historical data using an imitation learning strategy. Alternatively, if a network simulator is available, the RL algorithm can be trained on it.
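The simulator-based training option can be sketched as follows. Everything about the "simulator" here is a hypothetical toy model chosen only for illustration: one channel with four selectable line rates, assumed SNR thresholds per rate, a Gaussian-fluctuating channel SNR, and a fixed penalty when the chosen rate fails. None of these numbers come from real equipment. The agent learns by trial and error which rate to run; for imitation learning from historical data, the calls to `simulate_step` would instead be replaced by logged (state, action, reward, next state) records.

```python
import random

# Hypothetical toy simulator of one optical channel. All numbers are
# illustrative assumptions, not measured or vendor values.
RATES_GBPS = [100, 200, 300, 400]          # selectable line rates
REQUIRED_SNR_DB = [8.0, 12.0, 16.0, 20.0]  # assumed SNR each rate needs to run error-free
MEAN_SNR_DB, SNR_NOISE_DB = 17.5, 0.5      # channel SNR fluctuates around its mean
OUTAGE_PENALTY = -400.0                    # reward when the chosen rate fails (QoT violated)
ACTIONS = (-1, 0, 1)                       # step the rate down, hold, or step it up


def simulate_step(rate_idx, action, rng):
    """Apply an action in the toy model and return (next_state, reward)."""
    next_idx = min(max(rate_idx + action, 0), len(RATES_GBPS) - 1)
    snr = rng.gauss(MEAN_SNR_DB, SNR_NOISE_DB)
    if snr >= REQUIRED_SNR_DB[next_idx]:
        reward = float(RATES_GBPS[next_idx])  # capacity delivered this step
    else:
        reward = OUTAGE_PENALTY               # channel drops at this rate
    return next_idx, reward


def train(episodes=2000, steps=20, alpha=0.1, gamma=0.5, epsilon=0.2, seed=1):
    """Tabular Q-learning by trial and error against the toy simulator."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(len(RATES_GBPS)) for a in ACTIONS}
    for _ in range(episodes):
        state = rng.randrange(len(RATES_GBPS))  # random start so every rate gets explored
        for _ in range(steps):
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)                          # explore
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])   # exploit
            next_state, reward = simulate_step(state, action, rng)
            best_next = max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    return q


def greedy_policy(q):
    """Best learned action for each rate index."""
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(len(RATES_GBPS))}


policy = greedy_policy(train())
print(policy)
```

With these assumed numbers, the learned policy should step up to 300 Gb/s and hold there, accepting its thin and occasionally violated margin, while stepping back down from 400 Gb/s, which the toy model almost never supports.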

Ensuring QoT with Reinforcement Learning

Although operating a network at near-zero margin increases the risk of short-term outages on individual channels, the benefits of this strategy far outweigh the risk. With this approach, the network can deliver greater net capacity than it would under routine operating conditions, even when the risk of intermittent dropped connections is taken into account. However, even though data connection reliability has increased, especially in the core of the network, applications have become increasingly intolerant of these types of interruptions. This is where it is beneficial to deploy an intelligent multi-layer controller that predicts and adapts to such changes, preventing as much packet loss as possible.
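The net-capacity argument above comes down to simple arithmetic: a channel's long-run average throughput is its rate multiplied by the fraction of time it is up. The sketch below compares a conservatively provisioned channel with a near-zero margin one. The rates and outage fractions are hypothetical values chosen for illustration, not measurements.

```python
def expected_capacity_gbps(rate_gbps: float, outage_fraction: float) -> float:
    """Long-run average throughput of a channel that is down for outage_fraction of the time."""
    return rate_gbps * (1.0 - outage_fraction)


def break_even_outage(conservative_gbps: float, aggressive_gbps: float) -> float:
    """Outage fraction above which the higher rate stops paying off on average."""
    return 1.0 - conservative_gbps / aggressive_gbps


# Hypothetical single-channel comparison (illustrative assumptions, not measurements):
conservative = expected_capacity_gbps(200.0, 0.0)   # wide margin, assumed never down
near_zero = expected_capacity_gbps(300.0, 0.001)    # thin margin, assumed down 0.1% of the time
threshold = break_even_outage(200.0, 300.0)         # higher rate wins on average below this
```

Under these assumed figures, the near-zero margin channel averages about 299.7 Gb/s versus 200 Gb/s and would only fall behind if it were down a third of the time. An average like this says nothing about how disruptive each individual outage is to an application, which is why intolerance to interruptions still has to be managed.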

Figure 2. Application of reinforcement learning on a multi-layer packet-optical network

Ciena’s Blue Planet Analytics is an example of RL applied to a multi-layer packet-optical network to prevent packet loss:

- As shown above, the operator has a view of the allocated bandwidth, transmitted bytes, and dropped bytes for three competing services.

- Services 2 and 3 have priority 10, whereas Service 1 has a lower priority of 1.

- The region to the left of the vertical dashed line shows the period before the RL application is activated; the region to the right shows the period after activation.

- The RL algorithm acts to minimize dropped packets from high-priority services while maximizing overall throughput.

This is a perfect use case to show how applying RL allows the network to adapt to changing traffic patterns and bandwidth availability, increasing the efficiency of the network.
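One way to express the objective in this example (minimize dropped traffic from high-priority services while maximizing overall throughput) is as a scalar reward that an RL agent, such as a Q-learning controller, could be trained to maximize. Ciena has not described the reward used by Blue Planet Analytics here, so the shaping below (throughput minus priority-weighted drops), the `drop_weight` parameter, the 40 Gb/s demands, and the 100 Gb/s total are all assumptions; only the priorities (10, 10, and 1) come from the example.

```python
from dataclasses import dataclass


@dataclass
class Service:
    name: str
    priority: int        # higher value = more important
    demand_gbps: float   # offered load (assumed)


def reward(services, allocation_gbps, drop_weight=1.0):
    """Hypothetical reward: throughput minus priority-weighted dropped traffic."""
    transmitted = 0.0
    weighted_drops = 0.0
    for svc in services:
        sent = min(svc.demand_gbps, allocation_gbps[svc.name])
        transmitted += sent
        weighted_drops += svc.priority * (svc.demand_gbps - sent)
    return transmitted - drop_weight * weighted_drops


# Priorities follow the example above; demands and the 100 Gb/s total are assumptions.
services = [
    Service("Service 1", priority=1, demand_gbps=40.0),
    Service("Service 2", priority=10, demand_gbps=40.0),
    Service("Service 3", priority=10, demand_gbps=40.0),
]
equal_split = {"Service 1": 34.0, "Service 2": 33.0, "Service 3": 33.0}
priority_aware = {"Service 1": 20.0, "Service 2": 40.0, "Service 3": 40.0}

print(reward(services, equal_split))     # -46.0
print(reward(services, priority_aware))  # 80.0
```

Both allocations carry the same 100 Gb/s in total, but the priority-aware one scores higher because it confines the dropped traffic to the priority-1 service. This is the kind of trade-off the RL application makes automatically as traffic and available bandwidth change.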

Applying AI and ML to realize the benefits of the Adaptive Network

Network providers are under pressure to meet increasing capacity demands while continuing to lower the cost per bit of transport. This blog series has focused on an innovative approach that is being considered to keep future optical networks economically viable: maximizing the delivered capacity of optical transponders by mining the SNR margin down to a near-zero level. AI already plays an important role here by extracting analytics and insight from vast amounts of network data to help the network reach an optimal state. By applying machine learning techniques and closed-loop automation to optical networks, operators may be able to realize the benefits of near-zero margin networks much sooner than one may think.

Check out the other installments in the blog series:

- Intro: How near-zero margin networking changes network economics

- Part 1: Designing programmable infrastructure to achieve near-zero margin networks

- Part 2: Leveraging network analytics for unprecedented control and monitoring

Or want a more in-depth understanding of this new approach now? Download the full paper "Practical Considerations for Near-Zero Margin Network Design and Deployment" today.